In our previous article, we looked at memory usage on a DigitalOcean server running a Ghost blog. The goal was to understand why RAM usage on its 2GB of memory was sitting at roughly 50%.

After a brief investigation, we reduced memory usage by 20% by properly configuring my.cnf on Ubuntu. The fix was to tune mysqld, which was the largest consumer of memory on the server.

In this article, we revisit how Linux uses memory for caching by default and explain how to interpret what you see.

Disk Caching: Everything you need to know

Linux improves system performance with a mechanism called "disk caching": the kernel borrows otherwise unused memory to cache data read from the disk. This can make it look as though little memory is "free", but that impression is misleading. The system is simply putting idle memory to work, and because the cache is given back whenever applications need it, resources remain available.

Purpose of Disk Caching: The main purpose of disk caching is to speed up access to data stored on a disk. Disk reads and writes are much slower than operations involving RAM. By storing frequently accessed data in RAM, the system can reduce the need to access the disk, thereby speeding up overall operations.

Usage of Unused Memory: In Linux, memory that is not currently being used by processes may be used for the disk cache. This is a dynamic process, meaning that Linux will use more memory for disk caching when it is available but will release it when applications need more memory. This ensures that system memory is utilized efficiently.

Visibility: Users might observe that Linux systems often show a high percentage of memory usage even when the system is not running many applications. This is typically due to disk caching. Tools like free and top in Linux provide information about memory usage, including how much is being used by the cache.
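To see the cache directly, a minimal sketch, assuming a recent procps-ng free (which shows a "buff/cache" column) and the standard /proc/meminfo interface, where values are reported in kB:

```shell
# Human-readable summary in MB; the "buff/cache" column is the disk cache.
free -m

# The same figures straight from the kernel (values in kB).
grep -E '^(MemTotal|MemFree|MemAvailable|Buffers|Cached|SwapTotal|SwapFree):' /proc/meminfo
```

A large "Cached" value next to a small "MemFree" is the normal picture described above, not a sign of trouble.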

Reclaiming Memory: The memory used for caching is not permanently taken away from other applications. It is marked as available, so if an application needs more memory than what is free, the kernel will reduce the cache size to accommodate the new memory demands.

Impact on Performance: The use of disk caching significantly improves system performance, especially for applications that need to read from or write to the disk frequently. By reducing disk I/O, the overall system responsiveness improves.

Tuning Cache Settings: Advanced users and system administrators can tune the behavior of the disk cache via sysctl settings or other configuration methods. This allows for customization based on specific needs or performance goals.

Swap memory: Do you need more?

Determining whether you need more swap space on your Linux system depends on several factors, including your current system usage, the applications you run, and your overall system configuration. Here's a quick guide to help you decide whether to increase your swap space:

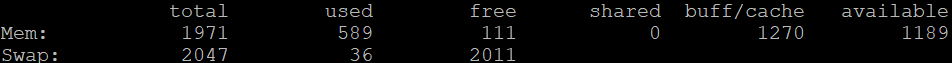

- Current Swap Usage: Check how much of your swap space is currently being used. You can use the free -m command to see this. If your swap usage is consistently high, that might be a sign you need more swap space.

- System Performance: If your system is slow or applications frequently crash due to out-of-memory errors, this can be a sign that your existing memory (RAM and swap) isn't sufficient.

- Amount of RAM: Systems with smaller amounts of RAM might benefit from more swap space, especially if running memory-intensive applications. Conversely, if you have a lot of RAM, your system might rarely use swap, unless running particularly demanding software or many applications at once.

- Type of Applications: Some applications, particularly those that handle large datasets (like video editing, simulation software, or databases), might require more swap space than what would typically be suggested for the average system.

- Suspend and Hibernate: If you use hibernation (which writes the contents of RAM to swap so the machine can power off completely), you need at least as much swap as you have RAM.

- General Guidelines: A traditional rule of thumb for swap space was to have twice the amount of RAM, but this rule is largely outdated, especially for systems with large amounts of RAM (e.g., 16GB or more). For modern systems, a swap size equal to or half the size of the RAM can be sufficient.

- Kernel Swappiness: The swappiness parameter controls how aggressively the kernel uses swap space. Before adding more swap, you might adjust this parameter to make better use of your current swap space. The value ranges from 0 to 100 (0 to 200 on kernels 5.8 and later); a lower value reduces swap usage and a higher value increases it.

To check the swappiness value:
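A minimal sketch, assuming a standard Linux /proc filesystem (the usual default value is 60):

```shell
# Print the current swappiness value.
cat /proc/sys/vm/swappiness
```

sysctl vm.swappiness reports the same value in "key = value" form.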

To temporarily change the swappiness value (e.g., to 10):

To make the change permanent, add the following line to /etc/sysctl.conf:
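The line to add, using the same example value of 10:

```
vm.swappiness=10
```

To apply the file without rebooting, run sudo sysctl -p, which reloads /etc/sysctl.conf by default.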

If you decide more swap is necessary, you can add swap by creating a new swap file or partition, or by increasing the size of the existing swap partition. Keep in mind that swapping is considerably slower than RAM, and excessive swapping can degrade system performance. Therefore, adding RAM might be a better solution for performance issues related to low available memory.

Disk Caching: Get me out of it, please!

Stopping Linux from using disk caching is generally not recommended as it is a critical feature for optimizing system performance. However, if you have a specific need to control or reduce the amount of memory used for caching, there are a few approaches you can take:

Adjust the vm.drop_caches Setting: You can manually clear the cache without restarting the system. This does not disable caching; it only empties the cache temporarily. To clear different types of caches, write a value to the drop_caches file in the /proc/sys/vm directory (exposed by sysctl as vm.drop_caches):

To clear the pagecache:
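A minimal sketch, assuming sudo access. sync runs first so dirty pages are written to disk and become clean, droppable cache. tee is used because sudo echo 1 > /proc/sys/vm/drop_caches would perform the redirection in the unprivileged shell and fail:

```shell
# Flush dirty pages to disk, then drop the clean pagecache (requires root).
sync
echo 1 | sudo tee /proc/sys/vm/drop_caches
```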

To clear dentries and inodes:
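A sketch assuming sudo access. Writing 2 asks the kernel to free reclaimable slab objects, which include dentries and inodes; sync first writes out dirty data so more of it can be reclaimed:

```shell
# Flush dirty data, then free reclaimable dentries and inodes (requires root).
sync
echo 2 | sudo tee /proc/sys/vm/drop_caches
```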

To clear pagecache, dentries, and inodes:
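A sketch assuming sudo access. Writing 3 combines both cases, freeing the pagecache and reclaimable slab objects such as dentries and inodes:

```shell
# Flush dirty pages, then drop pagecache, dentries, and inodes together (requires root).
sync
echo 3 | sudo tee /proc/sys/vm/drop_caches
```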

Note that this is only a temporary solution and the system will begin caching again immediately after.

Tune Cache Pressure: You can adjust the vm.vfs_cache_pressure setting. This setting controls the tendency of the kernel to reclaim the memory which is used for caching of directory and inode objects. Increasing this value will cause the kernel to prefer reclaiming caches, thus reducing their size:

To change the cache pressure:

This is more of a tuning method than a complete disabling of caching.

Limiting Cached Memory: Some system administrators use cgroups (Control Groups) to limit the amount of memory used by certain processes, including how much can be used for cache. This is a complex method requiring detailed setup and is typically used in environments needing fine-grained resource control.

Kernel Modification: In the most extreme cases, it is theoretically possible to modify the Linux kernel to change or disable caching behavior. This is highly advanced and not advisable as it can lead to significant stability and performance issues, and it would require maintaining a custom kernel.

For most users and use cases, rather than trying to disable disk caching, it's better to investigate why excessive caching might be an issue and consider whether there might be other solutions to the problem, such as adding more RAM or optimizing application performance.

How to understand the output of top and free?

Much of the confusion comes from terminology. You and Linux agree that memory actively used by applications is "used", while memory not allocated to anything is "free".

However, what about memory that is currently employed for certain functions but can be repurposed for applications if needed?

You might call this memory "free", or at least "available". Linux draws a sharper line: free reports it under "buff/cache" and counts the reclaimable part toward "available" rather than "free". That is why "free" can look alarmingly low while "available" is still healthy.

How to know how much memory is currently available?

To check how much RAM your applications can use without resorting to swap space, run free -m and look at the "available" column. It shows the kernel's estimate of how much memory can be given to new applications without swapping data out to disk.
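free derives its "available" column from the kernel's MemAvailable estimate, so a script can read the figure directly. A minimal sketch, assuming a kernel new enough (3.14 or later) to expose MemAvailable in /proc/meminfo:

```shell
# MemAvailable is reported in kB; convert to whole MB for readability.
awk '/^MemAvailable:/ { printf "%d MB available\n", $2 / 1024 }' /proc/meminfo
```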

How to know when my Linux system is in danger?

A healthy Linux system typically displays several positive signs that suggest it is functioning well. Here are the key ones to look for:

- The "free memory" might be close to zero, which is normal in Linux as it tries to use as much RAM as possible for data buffering.

- The "available memory", which counts free memory plus the portion of buffers and cache that can be reclaimed, should leave ample headroom, ideally more than 20% of total RAM. This indicates there is enough memory for new applications without stressing the system.

- The "swap used" should remain stable; significant changes or increases could indicate that the system is running low on available physical memory, causing it to use swap space more frequently, which could slow down system performance.
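The 20% guideline above can be checked with a few lines of shell. A minimal sketch, assuming /proc/meminfo exposes MemTotal and MemAvailable; the function name mem_available_pct is hypothetical, and the threshold is this article's rule of thumb rather than a kernel limit:

```shell
# mem_available_pct (hypothetical helper): MemAvailable as a whole-number percentage of MemTotal.
mem_available_pct() {
    awk '
        /^MemTotal:/     { total = $2 }
        /^MemAvailable:/ { avail = $2 }
        END { printf "%d\n", avail * 100 / total }
    ' /proc/meminfo
}

pct=$(mem_available_pct)
# 20% is the rule of thumb from the text, not a kernel threshold.
if [ "$pct" -lt 20 ]; then
    echo "warning: only ${pct}% of RAM is available"
else
    echo "ok: ${pct}% of RAM is available"
fi
```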

When observing system memory indicators:

- If the "available memory" (free memory plus reclaimable buffers and cache) is close to zero, your system is running low on memory.

- If you notice that the "swap used" metric is increasing or fluctuating significantly, this could be a sign that your system is struggling to manage its memory and is resorting to using swap space more frequently to compensate.

- Running the command dmesg | grep oom-killer and finding entries from the out-of-memory (OOM) killer indicates that the kernel is forcibly terminating processes to free up memory. This is a clear sign of memory pressure: the system cannot maintain stable operation with the memory it has.

I can't sleep at night because of my Linux system

We always say that less is more, but here is some extra information if you want to make sure a Linux system is healthy from head to toe.

- Stable Performance: The system responds quickly to user input and executes tasks efficiently. There are no unexpected delays or lag, which often indicate underlying issues.

- Low System Load: The load averages (viewable with the uptime command or tools like htop) are reasonable relative to the number of CPU cores. A healthy system should not have a load average that far exceeds the number of cores for extended periods.

- Minimal Swap Usage: While some swap usage is normal, especially on systems with limited RAM or when running large applications, a healthy system should primarily use physical RAM and not rely heavily on swap space, as excessive swapping degrades performance.

- No Frequent Disk Thrashing: Disk thrashing occurs when the system is constantly swapping data between RAM and disk, which can slow performance significantly. Observing disk activity provides insight; tools like iostat can help.

- Manageable Memory and Disk Usage: Memory usage should be stable, with enough free or available memory for new applications and no constant memory exhaustion. Disk usage should also stay within limits, with plenty of free space on all partitions, especially /, /var, and /tmp, which are critical for system operations.

- Healthy Hardware Status: Check for signs of hardware issues such as overheating CPUs, failing disks, or RAM errors. Tools like smartctl (for disk health) and the system logs can help identify hardware-related errors.

- No System Errors or Critical Logs: Review system logs (/var/log/syslog, /var/log/messages, etc.) regularly for errors or critical warnings that might indicate problems. A healthy system should not generate frequent critical error messages.

- Regular Updates and Maintenance: The system should be regularly maintained and kept up to date with the latest security patches and software updates.

- Effective Network Performance: The network connections should be stable without frequent drops or high latency, which can indicate network configuration issues or hardware problems.

- Reliability and Uptime: The system should be able to run continuously without unscheduled reboots or downtime. Long uptimes, while not always necessary, can indicate a stable and reliable system.
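For the load and disk-usage items above, a quick snapshot can be scripted. A minimal sketch, assuming nproc (GNU coreutils) and /proc/loadavg are available; treating "far exceeds the number of cores" as "15-minute average above the core count" is an assumption made for illustration:

```shell
# Compare the 15-minute load average (third field of /proc/loadavg) with the core count.
cores=$(nproc)
load15=$(cut -d ' ' -f 3 /proc/loadavg)
# awk does the floating-point comparison that POSIX sh cannot.
if awk -v l="$load15" -v c="$cores" 'BEGIN { exit !(l > c) }'; then
    echo "warning: 15-minute load $load15 exceeds $cores cores"
else
    echo "ok: 15-minute load $load15 on $cores cores"
fi

# Free space on the partitions called out above.
df -h / /var /tmp
```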

If you need assistance, visit us at www.arfy.ca and drop us a line. We will be happy to jump on a call and share our knowledge.